My video is here.

My paper is here.

Wednesday, April 25, 2012

Saturday, April 21, 2012

Sphere Test

After much tribulation, I have managed to render a soft sphere! It turns out that, in my somewhat sleep-deprived state, I had forgotten to check whether the ray was hitting a zero-density voxel and to skip some of the computation when it was. Without that check I was rendering very strange-looking black cubes with sphere-esque blobs inside them. In any case, I am feeling much better about the state of my project than I was even just a few minutes ago.
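A minimal CPU-side sketch of that check, in C++ rather than the GLSL the renderer actually uses. The `VoxelGrid` type, the nearest-voxel `sample` lookup, and the `absorption` parameter are all assumptions made for illustration, not the project's real code:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical dense voxel grid holding one float density per cell.
struct VoxelGrid {
    int nx, ny, nz;
    std::vector<float> density;  // nx * ny * nz values, x varies fastest

    // Nearest-voxel lookup for a point in the unit cube [0, 1)^3.
    // Points outside the cube are treated as empty space.
    float sample(float x, float y, float z) const {
        if (x < 0.f || x >= 1.f || y < 0.f || y >= 1.f || z < 0.f || z >= 1.f)
            return 0.f;
        int ix = std::min(static_cast<int>(x * nx), nx - 1);
        int iy = std::min(static_cast<int>(y * ny), ny - 1);
        int iz = std::min(static_cast<int>(z * nz), nz - 1);
        return density[(static_cast<std::size_t>(iz) * ny + iy) * nx + ix];
    }
};

// March along origin + t * dir and return the accumulated opacity in [0, 1].
float marchOpacity(const VoxelGrid& grid, const float origin[3],
                   const float dir[3], float stepSize, int maxSteps,
                   float absorption) {
    assert(stepSize > 0.f && maxSteps > 0);
    float transmittance = 1.f;
    for (int i = 0; i < maxSteps; ++i) {
        float t = (i + 0.5f) * stepSize;  // sample at the middle of each step
        float d = grid.sample(origin[0] + t * dir[0],
                              origin[1] + t * dir[1],
                              origin[2] + t * dir[2]);
        if (d <= 0.f) continue;  // zero-density voxel: skip the rest of the work
        transmittance *= std::exp(-absorption * d * stepSize);
        if (transmittance < 1e-3f) break;  // effectively opaque, stop early
    }
    return 1.f - transmittance;
}
```

With the `continue` in place, empty voxels contribute nothing to the result, so a ray through an all-zero grid comes back fully transparent instead of darkening the bounding cube.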

Noisy Raymarch

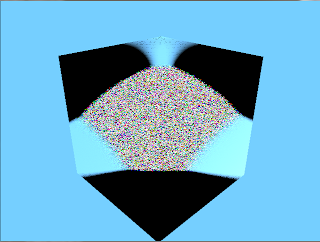

Here is an attempt to render a spherical cloud using raymarching, now that I've resolved my 3D texture issue. I'm not yet sure why that noise is appearing, nor am I certain why it does not look quite spherical. Still, it's an improvement over the black cube I was seeing before.

Here's another render (this time with what looks like an ellipse but what should be a sphere):

Texture Generation

Well, it turns out that, as I suspected, the source of my problems was the texture generation after all. I was using the constant GL_R instead of GL_RED when passing float data from my voxel buffer to the shader. GL_R is meant to be used in the context of a texture coordinate rather than a color channel. I'm a bit frustrated that it took me three days to track this bug down... At least I have this knowledge for any future OpenGL projects I might work on.

Wednesday, April 18, 2012

Raymarching Uncertainty

I've implemented raymarching code in the fragment shader. However, at the moment the object that is supposed to be my cloud is appearing as a black cube. I know the immediate cause: the function that samples density data from the 3D texture I pass to the shader always returns 0. I can think of two possible reasons for this:

1) The texture I'm sending to the shader is being created incorrectly.

2) My sampling function is wrong.

I'm inclined to believe that my texture is being made incorrectly, since I'm using a sampling function similar to the one I used when making a volumetric renderer in CIS 560. Now off to figure out why my texture is wrong...

Wednesday, April 11, 2012

Fragment Shader Raycasts

I figured out how to raycast in my fragment shader! I discovered the gl_FragCoord variable, which holds the coordinates of the current fragment in window (screen) space. By passing the shader the screen's dimensions I can convert these coordinates into normalized device coordinates and create a 3D ray based on the fragment and view matrix.
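The conversion can be sketched on the CPU in C++ as below. This mirrors the math rather than the actual shader: the `Camera` struct (an orthonormal basis plus a vertical field of view, standing in for the view matrix), `fragmentRay`, and the parameter names are all hypothetical:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return Vec3{v.x / len, v.y / len, v.z / len};
}

// Hypothetical camera: orthonormal basis vectors plus vertical FOV in radians.
struct Camera {
    Vec3 right, up, forward;
    float fovY;
};

// fragX and fragY play the role of gl_FragCoord.xy (window-space pixels).
Vec3 fragmentRay(const Camera& cam, float fragX, float fragY,
                 float width, float height) {
    assert(width > 0.f && height > 0.f);
    // Window coordinates -> normalized device coordinates in [-1, 1].
    float ndcX = 2.f * fragX / width - 1.f;
    float ndcY = 2.f * fragY / height - 1.f;
    // Half-extents of the image plane at unit distance from the eye.
    float halfH = std::tan(0.5f * cam.fovY);
    float halfW = halfH * (width / height);
    float sx = ndcX * halfW;
    float sy = ndcY * halfH;
    // Offset the forward vector along the camera's right and up axes.
    Vec3 d{cam.forward.x + sx * cam.right.x + sy * cam.up.x,
           cam.forward.y + sx * cam.right.y + sy * cam.up.y,
           cam.forward.z + sx * cam.right.z + sy * cam.up.z};
    return normalize(d);
}
```

A fragment at the exact center of the window maps to NDC (0, 0), so its ray points straight along the camera's forward vector. That gives a quick sanity check similar in spirit to coloring fragments by ray direction.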

I've tested my raycasts by coloring each fragment with the X, Y, and Z components of the ray it generates. So far everything looks correct. Next step: getting a raymarch to work!

As an added bonus, every ray is guaranteed to hit the cloud's bounding box, since rays are only generated from fragments of the box itself. I can skip that particular intersection test during my raymarch now, since it would actually slow down my renders rather than speed them up as it would in an offline renderer.

Rays as RGB:

Monday, April 9, 2012

GL Bug Fixed

Earlier this week I encountered some trouble getting just a simple cube to render in my GLUT window. I thought I was doing something wrong when initializing my GL viewport, since I was only able to see the background color of my scene. It turns out that my program wasn't properly setting a value for the unsigned int used to represent a varying vec4 in my shader program, and as such none of my vertices were being placed anywhere in the GL scene. To fix this I just defined its location myself using layout(location) in its definition in my vertex shader. Now I can actually see the geometry I generate and know that my camera controls are working properly. Next step: change my fragment shader to use raymarch code to render a cloud.

Sunday, April 1, 2012

Midpoint Progress

I've made up some slides outlining my work and what I still have to make progress on. This week I attempted to create an offline volumetric renderer of my cloud data, thinking that it would be easy since I had made one before. Turns out that task was harder than I expected and all I have to show for my efforts is a black rectangular blob on a blue background...

Presentation

Saturday, March 31, 2012

3/31 Progress

I ought to get in the habit of posting to this blog regularly.

Since my last post I've been working on coding a cloud generator using a voxel buffer based on chapter 2 of Game Engine Gems 2. I've decided that, rather than modifying my existing volumetric renderer code, I will just code a new C++ project from scratch. I think the final product will differ too much from my old volumetric renderer to make it worth modifying pre-existing code.

I also need to do more reading on 3D textures. I've decided that, in the long run, learning more about GLSL will be better than attempting to code the renderer in CUDA. I enjoy coding in GLSL more and, as much as it pains me to admit it, I am not adept at writing efficient CUDA code. As such, I will be rendering the clouds I generate via 3D textures and raymarching in GLSL.

For Monday I expect to have offline rendering of my cloud generation.

Wednesday, March 14, 2012

Description / Proposal

I propose to extend my volumetric renderer from CIS 560 by adding a more realistic cloud generator, rendering the cloud(s) with the GPU, and altering the cloud lighting formula. I will be basing my work on Frank Kane’s chapter of Game Engine Gems 2, which describes techniques for modeling, lighting, and rendering clouds in real time. The cloud generator Kane describes stores three different bits of data (vapor, phase transition, and cloud) in each cell of a voxel grid in order to procedurally generate a cloud within the grid. The grid is initially randomly seeded with vapor bits. When the cloud generation algorithm is run, during each iteration the three different cell attributes are examined and the cells are updated based on their data and the data of nearby cells. When a cell contains a vapor bit and a cell next to or below (but not above) it has a transition bit, that cell also gains a transition bit and loses the vapor bit. When a cell has a phase transition bit, it gains the cloud bit. Additionally, each iteration applies a small probability that a cell loses or gains certain bits, in order to represent cloud particles evaporating or more water condensing. This random function is necessary for the formation of a cloud since the initial cloud grid contains only vapor cells; phase transitions must be randomly created during iterations of the algorithm.
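One iteration of the update rules as described above might look like the following C++ sketch. It follows this post's summary rather than the chapter's exact formulation. The flag names, the `CloudGrid` and `StepProbabilities` types, the choice of y as "up", and which bits the random step touches are all assumptions:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Per-cell bit flags (illustrative names).
enum : std::uint8_t { VAPOR = 1, TRANSITION = 2, CLOUD = 4 };

struct CloudGrid {
    int nx, ny, nz;                   // y is "up" in this sketch
    std::vector<std::uint8_t> cells;  // nx * ny * nz flag bytes

    std::size_t index(int x, int y, int z) const {
        return (static_cast<std::size_t>(z) * ny + y) * nx + x;
    }
    // Out-of-range neighbors count as having no bits set.
    bool has(int x, int y, int z, std::uint8_t bit) const {
        if (x < 0 || x >= nx || y < 0 || y >= ny || z < 0 || z >= nz)
            return false;
        return (cells[index(x, y, z)] & bit) != 0;
    }
};

// Assumed per-cell probabilities for the random gain/loss step.
struct StepProbabilities { double gainVapor, gainTransition, loseCloud; };

void stepCloud(CloudGrid& g, const StepProbabilities& p, std::mt19937& rng) {
    assert(g.cells.size() == static_cast<std::size_t>(g.nx) * g.ny * g.nz);
    std::uniform_real_distribution<double> u(0.0, 1.0);
    std::vector<std::uint8_t> next(g.cells.size());
    for (int z = 0; z < g.nz; ++z)
    for (int y = 0; y < g.ny; ++y)
    for (int x = 0; x < g.nx; ++x) {
        const std::uint8_t old = g.cells[g.index(x, y, z)];
        std::uint8_t cell = old;
        // Neighbors beside or below the cell, never the one above it.
        bool nearTransition =
            g.has(x - 1, y, z, TRANSITION) || g.has(x + 1, y, z, TRANSITION) ||
            g.has(x, y, z - 1, TRANSITION) || g.has(x, y, z + 1, TRANSITION) ||
            g.has(x, y - 1, z, TRANSITION);
        if ((old & VAPOR) && nearTransition) {
            cell |= TRANSITION;                          // gain transition bit
            cell &= static_cast<std::uint8_t>(~VAPOR);   // lose vapor bit
        }
        if (old & TRANSITION) cell |= CLOUD;             // transition -> cloud
        // Random condensation and evaporation.
        if (u(rng) < p.gainVapor) cell |= VAPOR;
        if (u(rng) < p.gainTransition) cell |= TRANSITION;
        if (u(rng) < p.loseCloud) cell &= static_cast<std::uint8_t>(~CLOUD);
        next[g.index(x, y, z)] = cell;
    }
    g.cells.swap(next);  // all cells update from the previous state at once
}
```

Writing into a separate `next` buffer keeps the update synchronous, so a cell never reacts to a neighbor that has already been updated in the same iteration. Setting all three probabilities to zero makes a step deterministic, which is handy for checking the neighbor rules in isolation.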

Once the algorithm has generated cloud data for the grid, the cloud can be rendered. Kane describes three different methods of doing this: volumetric splatting, volumetric slicing, and ray marching. At the moment I plan on implementing just ray marching (I think it would look the most realistic). Before the actual rendering can begin, though, the cells’ lighting must be calculated. By using a multiple forward scattering technique to simulate the way light is dispersed through a cloud, the lighting of each voxel can be computed before the raymarch. After computing the lighting of a single voxel, one can use its light value to then compute the lighting of neighboring voxels, since light scattering is not confined to just one small section of the cloud.

The volumetric renderer will, in addition to the main cloud generator and renderer, support various types of lights: point lights, directional lights, spot lights, and area lights. After all, a cloud will look less realistic if there is nothing with which to light it. Multiple lights in one scene will also be supported.

As for why there is a need for my project: real-time rendering and generation of volumetric clouds is useful for games. It makes the game look more realistic.
